Please note: This page is still under construction. If you have any questions or comments, click on the "send us your questions" link at the bottom of the page.

The Imager for Mars Pathfinder (IMP) and the Surface Stereo Imager (SSI) are nearly identical. The IMP flew on the Mars Pathfinder mission and took more than 16,000 images of the Martian surface in Ares Vallis between July 4 and September 27, 1997. The SSI will be launched in January 1999 as part of the Mars Volatiles and Climate Surveyor package, on the Mars Polar Lander '98 mission. In late 1999 it will land near the south pole of Mars. On this page, for convenience, IMP images are discussed. However, the same facts apply to SSI images.

Dissecting an IMP Image | How the IMP Records Images | How the IMP Filters Work | More information is available in How the IMP Works


Words in italics are defined in our glossary; click on them to see their definitions. If a word is used repeatedly, only the first few uses are linked to the glossary.

Anatomy: Dissecting

an IMP Image

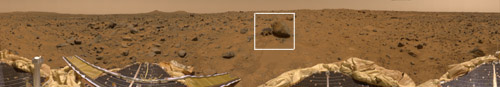

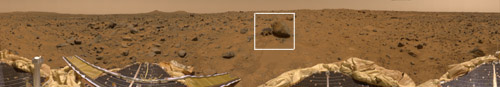

Below is the "Presidential Pan," a full color

360° panorama of the Pathfinder landing site. Many people

who have seen the Presidential Pan may not realize that this large

image is made of many small images (395!). To get an idea

of what goes into an image like this, let's examine the small

area in the white box.


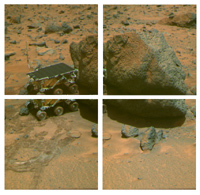

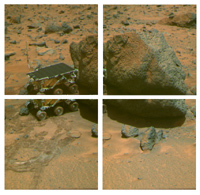

This small portion of the pan is made from four smaller

images:


As you can see in the four frames above, there is an

overlap of the images making it much easier to create a panorama.

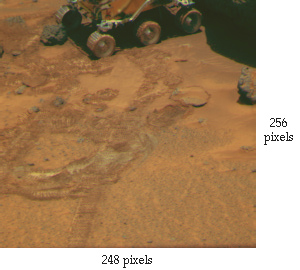

Why do we have to take small images and compile them into a larger image? The size of each image is determined by the size of the CCD (charge-coupled device) detector, the small chip within the IMP that registers reflected light so that it can be transformed into binary code, the language of computers and digital images. This means that images are limited to 256 x 248 pixels.

(For more information

about how the camera works, jump ahead to How the IMP Records

Images)
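To make the idea of overlapping frames concrete, here is a minimal Python sketch of how a grid of small frames could be pasted into one larger mosaic. It is only an illustration, not the procedure the IMP team used: the function names, the 20-pixel overlap, and the choice to average overlapping pixels are all assumptions, and the sketch ignores the parallax and geometric corrections a real panorama needs. The 256 x 248 frame size is the figure quoted above.

```python
def mosaic_size(frame_w, frame_h, cols, rows, overlap):
    """Return (width, height) of a grid mosaic whose neighbours share `overlap` pixels."""
    width = cols * frame_w - (cols - 1) * overlap
    height = rows * frame_h - (rows - 1) * overlap
    return width, height


def paste_frames(frames, frame_w, frame_h, cols, rows, overlap):
    """Paste row-major `frames` into one mosaic, averaging pixels where frames overlap.

    Each frame is a list of rows, and each row is a list of brightness numbers.
    """
    width, height = mosaic_size(frame_w, frame_h, cols, rows, overlap)
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for index, frame in enumerate(frames):
        r, c = divmod(index, cols)
        x0 = c * (frame_w - overlap)  # left edge of this frame in the mosaic
        y0 = r * (frame_h - overlap)  # top edge of this frame in the mosaic
        for y in range(frame_h):
            for x in range(frame_w):
                total[y0 + y][x0 + x] += frame[y][x]
                count[y0 + y][x0 + x] += 1
    return [[total[y][x] / count[y][x] for x in range(width)] for y in range(height)]


# Four 256 x 248 frames in a 2 x 2 grid with an assumed 20-pixel overlap.
print(mosaic_size(256, 248, 2, 2, 20))  # (492, 476)
```

The shared pixels are what let neighbouring frames be lined up against each other; without any overlap there would be nothing to match between one frame and the next.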


These individual images must be combined to create a panorama. This is not a simple process, because corrections must be made for parallax. Delving further into this IMP image, we discover that each 248 x 256 pixel color frame (like the one above) is actually built from three images. The IMP "sees" light one color at a time, and making what we call a color image requires red, green and blue light. The three images below are the components that make up the color picture that you see above.
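As a rough illustration of how three single-color frames become one color frame, the Python sketch below stacks a red, a green and a blue brightness grid into a grid of (r, g, b) triples. The function name `combine_rgb` and the tiny 2 x 2 sample values are made up for this example; real processing also involves calibration steps that are not shown here.

```python
def combine_rgb(red, green, blue):
    """Merge three same-sized grayscale frames into one frame of (r, g, b) tuples."""
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("frames must have the same number of rows")
    color = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("rows must have the same length")
        # Pair up the three brightness values recorded for each pixel position.
        color.append(list(zip(r_row, g_row, b_row)))
    return color


# Hypothetical 2 x 2 frames, one per filter (values are illustrative only).
red = [[200, 180], [90, 60]]
green = [[120, 110], [80, 55]]
blue = [[60, 50], [70, 50]]

color = combine_rgb(red, green, blue)
print(color[0][0])  # (200, 120, 60)
```

Each black and white frame supplies exactly one of the three numbers a color pixel needs, which is why one color frame requires three exposures.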

Why do you need three black and white images to make one color image? The IMP records images in this special way, using a CCD detector, for a few reasons. First, CCD detector images are easily transformed into digital images in binary code, which is easily transmitted through the solar system via radio waves. Second, scientists want to be able to look at images in terms of numbers. With the IMP's CCD images, we can accurately measure and compare the brightness of individual objects in a scene.
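The phrase "binary code" can be made concrete with a small Python sketch that turns a few pixel brightness numbers into a string of ones and zeros and back again. The 12 bits per pixel used here is an assumed value for illustration, not a figure from this page, and the function names are hypothetical. The real downlink also involves compression and error protection, which this sketch leaves out.

```python
BITS_PER_PIXEL = 12  # assumed bit depth, for illustration only


def encode_pixels(values, bits=BITS_PER_PIXEL):
    """Turn a list of brightness numbers into one long string of 0s and 1s."""
    for v in values:
        if not 0 <= v < 2 ** bits:
            raise ValueError("value {} does not fit in {} bits".format(v, bits))
    return "".join(format(v, "0{}b".format(bits)) for v in values)


def decode_pixels(bitstring, bits=BITS_PER_PIXEL):
    """Recover the brightness numbers from the string of 0s and 1s."""
    return [int(bitstring[i:i + bits], 2) for i in range(0, len(bitstring), bits)]


pixels = [5, 1024, 4095]
message = encode_pixels(pixels)
print(message)                 # 000000000101010000000000111111111111
print(decode_pixels(message))  # [5, 1024, 4095]
```

Because the numbers survive the round trip exactly, the brightness values measured on Mars can be compared on Earth without any loss from the encoding itself.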

To better understand these concepts, you might want

a short review of the nature of light.

(if you don't need a review, go to

How the IMP records Images or

How the IMP filters work)

A Short Review of

Light:

The range of light that we perceive is only a small portion of the light that exists. When we use the term light, we tend to think of visible light, which we see every day. A broader definition of light is electromagnetic radiation. Below is a diagram of the electromagnetic spectrum.

From left to right, this spectrum goes from short wavelength/high

energy forms of light, to long wavelength/low energy forms of

light. We can only see the light in the middle of the spectrum.

Short wavelength light forms like gamma rays and long wavelength

light forms like radio waves are invisible to our eyes, and we

need special instruments to detect them.


Light is essentially energy. You can think of it as a wave or as particles. Sometimes it makes more sense to think of it as a wave, and sometimes it makes more sense to think of it as particles. When people talk about light as particles, they call them photons. A photon is a small clump of energy. Each kind of light that you see in the spectrum above (gamma rays, radio waves, etc.) carries a different amount of energy per photon.

If you think of light as photons, you can imagine that our camera records the number of photons that fall on each compartment of our CCD detector. The more photons that fall on a particular compartment, the brighter that area of the scene is. When the information from the CCD detector is transformed into binary code, each small compartment of the detector becomes a pixel.
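The photon-counting picture can be sketched in a few lines of Python. This is a simplified model under stated assumptions: every photon is taken to land in exactly one compartment and to be counted perfectly, and the counts are scaled so the busiest compartment becomes the brightest pixel. A real CCD is not this ideal, and the function names and the 0 to 255 scale are choices made for this example.

```python
def count_photons(hits, width, height):
    """Count how many photons landed in each compartment.

    `hits` is a list of (x, y) compartment coordinates, one entry per photon.
    """
    counts = [[0] * width for _ in range(height)]
    for x, y in hits:
        counts[y][x] += 1
    return counts


def to_pixels(counts, max_value=255):
    """Scale photon counts so the busiest compartment maps to `max_value`."""
    peak = max(max(row) for row in counts)
    if peak == 0:
        return [[0] * len(row) for row in counts]
    return [[n * max_value // peak for n in row] for row in counts]


# Seven hypothetical photons falling on a 2 x 2 detector.
hits = [(0, 0), (0, 0), (1, 0), (1, 1), (1, 1), (1, 1), (1, 1)]
counts = count_photons(hits, width=2, height=2)
print(counts)             # [[2, 1], [0, 4]]
print(to_pixels(counts))  # [[127, 63], [0, 255]]
```

The compartment that caught four photons becomes the brightest pixel, and the one that caught none stays black.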

(More information about this is available

in How the IMP records Images)

Now let's think of light as a wave. When you hear someone refer to different "wavelengths" of light, they are referring to the distance between peaks of the wave:

As we saw above, human eyes are not capable of perceiving the

entire range of light's wavelengths, and we refer to the relatively

small area that we can see as "visible light."

While our eyes are somewhat limited in their ability to see light,

within the range of visible light we can distinguish between certain

wavelengths. For example, we perceive the 440 nm wavelength

of light as blue, and the 670 nm wavelength of light as red.

We use nanometers (nm) to talk about the wavelengths of visible

light. A nanometer is one billionth of a meter.
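The link between wavelength and the energy carried by each photon can be worked out with the standard physics relation E = hc/λ, where h is Planck's constant, c is the speed of light and λ is the wavelength. That formula is not stated on this page; it is brought in here only to illustrate the point. The short Python sketch below converts nanometers to meters and computes the energy per photon for the blue and red wavelengths mentioned above. The function names are hypothetical.

```python
PLANCK_CONSTANT = 6.62607015e-34  # joule-seconds
SPEED_OF_LIGHT = 299_792_458.0    # meters per second


def nm_to_m(wavelength_nm):
    """Convert nanometers to meters (one nanometer is one billionth of a meter)."""
    return wavelength_nm * 1e-9


def photon_energy_joules(wavelength_nm):
    """Energy of a single photon, E = h * c / wavelength."""
    return PLANCK_CONSTANT * SPEED_OF_LIGHT / nm_to_m(wavelength_nm)


for name, nm in [("blue", 440), ("red", 670)]:
    print("{}: {} nm -> {:.2e} J per photon".format(name, nm, photon_energy_joules(nm)))
```

The blue photon comes out at roughly 4.5e-19 joules and the red photon at roughly 3.0e-19 joules, which matches the spectrum described earlier: shorter wavelengths carry more energy per photon.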

We are so used to using our eyes as tools to understand the

world that we sometimes forget how they function. When

we look around us, the colors that we see are actually reflected

light. Different materials reflect and absorb different

varieties of light. If you look at two apples, one red and

one green, the difference between the two is that the red apple

is absorbing most of the wavelengths of light, but is very good

at reflecting the long wavelengths of light around 670 nm, which

we perceive as red. The green apple, on the other hand,

is absorbing most wavelengths of light, but is reflecting near

the 530 nm wavelength which we perceive as green.
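The two apples can be described with numbers. The Python sketch below stores, for each apple, the fraction of light it reflects at the three wavelengths mentioned on this page (440, 530 and 670 nm) and reports which one dominates. The reflectance values are invented for illustration and are not measurements, and the three-point "spectrum" is a heavy simplification of how real surfaces and human vision behave.

```python
# Color names for the three wavelengths (in nm) discussed in the text.
COLOR_NAMES = {440: "blue", 530: "green", 670: "red"}


def dominant_color(reflectance):
    """Return the color name of the wavelength that is reflected most strongly.

    `reflectance` maps wavelength in nm to the fraction of light reflected (0 to 1).
    """
    strongest = max(reflectance, key=reflectance.get)
    return COLOR_NAMES[strongest]


# Illustrative, made-up reflectance values.
red_apple = {440: 0.05, 530: 0.10, 670: 0.60}
green_apple = {440: 0.08, 530: 0.55, 670: 0.12}

print(dominant_color(red_apple))    # red
print(dominant_color(green_apple))  # green
```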

We can learn a lot about an object by what colors/wavelengths

of light are reflected. When we look at IMP images of the

landing site we see that some rocks reflect more blue light and

some reflect more red light. We can see that some of the

dust and soil in the scene is brighter than others. Because

of the way that CCD images work, we can quantify, or put into

numbers, comparisons like bright soil vs. dark soil, and through

analysis we can reach more exacting conclusions than if we could

only generalize about bright and dark.
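As a sketch of what "putting comparisons into numbers" can look like, the Python example below averages the pixel values inside two rectangular patches of a frame and reports how many times brighter one is than the other. The frame contents, the patch positions and the function name are all hypothetical; real analysis works on calibrated data rather than raw pixel values.

```python
def mean_brightness(frame, top, left, height, width):
    """Average pixel value inside a rectangular patch of `frame` (a list of rows)."""
    values = [
        frame[y][x]
        for y in range(top, top + height)
        for x in range(left, left + width)
    ]
    return sum(values) / len(values)


# A made-up 2 x 4 frame: dark soil on the left, bright soil on the right.
frame = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

dark = mean_brightness(frame, top=0, left=0, height=2, width=2)
bright = mean_brightness(frame, top=0, left=2, height=2, width=2)
print(dark, bright, bright / dark)  # 10.0 200.0 20.0
```

The same function applied to frames taken through the red and blue filters would give a red-to-blue ratio for a rock, which is a more exact statement than saying it simply looks reddish.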

You and I are accustomed to having our brain and eyes work

behind the scenes, putting color images together for us.

In the processing of IMP images, things were not so easy.

Our color images came to us in pieces, one could say. To

create a color image, we would have to assemble data from three

images: those taken in the red, green and blue filters.

There is more about how the filters work in the following section.

How the IMP Filters Work | How the IMP Records Images | How the IMP Works | Send us your questions about the IMP | Return to IMP Home page